From the Computational Photography resource link, select a topic of interest. Give a brief report and describe how such a camera might advance your own artistic work.

2D to 3D Conversion of Sports Content - Lars Schnyder, Oliver Wang, Aljoscha Smolic

Disney Research Zürich and University of California, Santa Cruz

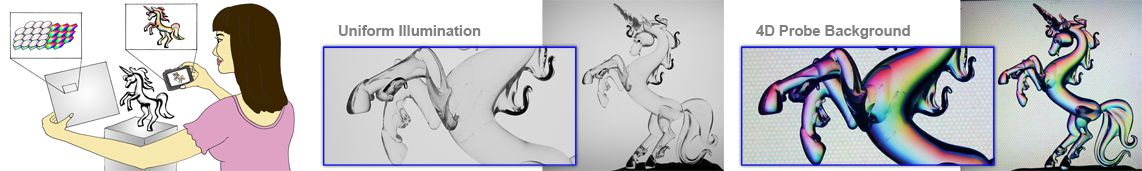

This research focuses on the problem of converting images captured by a single monoscopic camera into a stereoscopic 3D view.

In particular, the authors present a system that automatically creates stereoscopic video from monoscopic footage of field-based sports, using a technique that constructs per-shot panoramas to ensure temporally consistent depth in the reconstructed video.
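A per-shot panorama means registering every frame of a shot into one common image. The sketch below is only an illustration of that registration step under stated assumptions, not the authors' code: it assumes the frame-to-frame homographies have already been estimated somehow (the report does not say how), and the function names `chain_to_reference`, `apply_homography` and `panorama_bounds` are hypothetical. It chains the pairwise homographies so that each frame maps into the first frame of the shot, then computes how large the panorama must be.

```python
import numpy as np

def chain_to_reference(pairwise):
    """Turn frame-to-frame homographies into frame-to-reference homographies.

    Assumption: pairwise[k] maps pixel coordinates of frame k+1 into frame k,
    so result[k] maps frame k into frame 0, used here as the panorama frame.
    """
    to_ref = [np.eye(3)]
    for H in pairwise:
        # (frame k -> frame 0) composed with (frame k+1 -> frame k)
        to_ref.append(to_ref[-1] @ H)
    return to_ref

def apply_homography(H, pts):
    """Map an (N, 2) array of pixel coordinates through a 3x3 homography."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]  # back from homogeneous coordinates

def panorama_bounds(to_ref, width, height):
    """Bounding box (x_min, y_min, x_max, y_max) covering every frame of the shot."""
    corners = np.array([[0, 0], [width, 0], [0, height], [width, height]], dtype=float)
    mapped = np.vstack([apply_homography(H, corners) for H in to_ref])
    x_min, y_min = mapped.min(axis=0)
    x_max, y_max = mapped.max(axis=0)
    return x_min, y_min, x_max, y_max

# Hypothetical example: a camera panning so each new frame lies 10 px further right.
pan = np.array([[1.0, 0.0, 10.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
to_ref = chain_to_reference([pan, pan])
bounds = panorama_bounds(to_ref, width=100, height=50)
```

Chaining to a single reference frame keeps the whole shot in one coordinate system, which is what lets depth painted once on the panorama stay consistent from frame to frame.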

Using prior knowledge about the scene, the method produces synthesized 3D shots that are almost indistinguishable from ground-truth stereo footage.

The spread of 3D-at-home systems is held back by problems such as the limited amount of available content and the small number of 3D broadcasts, because creating stereoscopic footage is still very expensive and difficult. Conversion from 2D to 3D is therefore an important alternative, not least because it also allows existing content to be converted.

The typical conversion pipeline consists of estimating the depth for each pixel, projecting them into a new view, and then filling in holes that appear around object boundaries. Each of these steps is difficult and, in the general case, requires large amounts of manual input, making it unsuitable for live broadcast. Existing automatic methods cannot guarantee the quality and reliability that are necessary for TV broadcast applications. Sports games are a prime candidate for stereoscopic viewing, as they are extremely popular, and can benefit from the increased realism that stereoscopic viewing provides.
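To make the three pipeline steps concrete, here is a minimal sketch of generic depth-image-based rendering. It is not the method of this paper: it assumes the per-pixel depth map (step 1, the hard part) is already given, models disparity as `scale / depth` for parallel cameras (an assumption), and uses the hypothetical function name `render_shifted_view`. Step 2 forward-warps each pixel with a z-buffer so nearer surfaces win; step 3 fills the holes that open up beside object boundaries by copying the farther (background) neighbour.

```python
import numpy as np

def render_shifted_view(image, depth, scale):
    """Forward-warp `image` (H x W) into a horizontally shifted second view.

    Assumption: disparity = scale / depth, so nearer pixels shift further.
    """
    h, w = depth.shape
    disparity = np.rint(scale / depth).astype(int)
    out = np.zeros_like(image)
    zbuf = np.full((h, w), np.inf)  # depth of the surface drawn at each target pixel

    # Step 2: project every source pixel into the new view, keeping the nearest surface.
    for y in range(h):
        for x in range(w):
            xt = x - disparity[y, x]
            if 0 <= xt < w and depth[y, x] < zbuf[y, xt]:
                zbuf[y, xt] = depth[y, x]
                out[y, xt] = image[y, x]

    # Step 3: fill disocclusion holes from the farther (background) neighbour.
    filled = np.isfinite(zbuf)
    result = out.copy()
    for y in range(h):
        cols = np.flatnonzero(filled[y])
        if cols.size == 0:
            continue  # nothing landed in this row, leave it empty
        for x in np.flatnonzero(~filled[y]):
            left, right = cols[cols < x], cols[cols > x]
            candidates = []
            if left.size:
                candidates.append(left[-1])
            if right.size:
                candidates.append(right[0])
            src = max(candidates, key=lambda c: zbuf[y, c])  # larger depth = background
            result[y, x] = out[y, src]
    return result

# Hypothetical one-row scene: a near object (depth 5) in front of a far background (depth 100).
image = np.array([[1, 1, 1, 9, 9, 1, 1, 1]])
depth = np.array([[100, 100, 100, 5, 5, 100, 100, 100]], dtype=float)
view = render_shifted_view(image, depth, scale=10.0)
```

In the example the near object shifts two pixels while the background barely moves, leaving a two-pixel hole that is filled with background values. Real footage produces far larger and messier holes, which is one reason general-case conversion needs so much manual work.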

The method takes advantage of prior knowledge, such as the known field geometry and appearance, player heights, and player orientation. It creates a temporally consistent depth impression by reconstructing a background panorama with depth for each shot (a series of sequential frames belonging to the same camera) and by modelling players as billboards.
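A billboard is a flat card standing upright on the field and facing the camera, so a single depth value is enough for the whole player. The sketch below illustrates that idea under assumptions of my own, not the authors' implementation: a per-frame background depth map is already available (for example, taken from the panorama), each player is given as a bounding box standing in for a proper segmentation mask, and the ground contact point is taken as the bottom-centre of the box. The function name `composite_billboards` is hypothetical.

```python
import numpy as np

def composite_billboards(background_depth, boxes):
    """Overlay player billboards onto a per-frame background depth map.

    Assumptions: boxes are (x0, y0, x1, y1) pixel boxes with exclusive x1/y1,
    and the player's feet touch the field at the bottom-centre of the box.
    """
    depth = np.asarray(background_depth, dtype=float).copy()
    h, w = depth.shape
    players = np.full_like(depth, np.inf)  # billboard depth layer, inf = no player
    for x0, y0, x1, y1 in boxes:
        foot_x = min((x0 + x1 - 1) // 2, w - 1)
        foot_y = min(y1 - 1, h - 1)
        card_depth = depth[foot_y, foot_x]  # field depth where the player stands
        region = players[y0:y1, x0:x1]
        # a flat card: one depth for the whole box; overlapping cards keep the nearer one
        np.minimum(region, card_depth, out=region)
    mask = np.isfinite(players)
    depth[mask] = players[mask]
    return depth

# Hypothetical field whose depth grows towards the top of the image (row 0 is farthest).
background = np.tile(np.arange(10, 0, -1, dtype=float)[:, None], (1, 6))
result = composite_billboards(background, [(1, 4, 3, 8)])
```

Taking the depth from the ground contact point is what ties the player to the field geometry: a player whose feet are higher up in the image is automatically placed farther away.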

The result is a rapid, automatic, temporally stable and robust 2D-to-3D conversion that can be used to provide full 3D viewing of a sporting event at reduced cost. This could also be of real interest for photography and consumer cameras.

When the converted footage was compared with real stereo footage, some differences could be noticed, such as the graphic overlay, but most viewers were not able to distinguish the two videos.

The implementation computes multiple passes to create homographies, panoramas and stereo frames. Running unoptimized, research-quality code on a standard personal computer, it achieves per-frame computation times of 6-8 seconds.

The researchers state that this per-frame cost does not depend on the total video length, so it would be possible to run their algorithm on streaming footage, given a short delay and increased processing power.
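A quick back-of-the-envelope calculation shows how much "increased processing power" means. The 25 frames per second used below is an assumed broadcast frame rate, not a figure from the paper, and the worker count is an idealized estimate that ignores dependencies between frames of the same shot (the panorama needs several frames, which is presumably why a short delay is required). The function name `realtime_budget` is hypothetical.

```python
import math

def realtime_budget(seconds_per_frame, fps):
    """Return (speed-up needed, parallel workers needed) to keep up with a live feed.

    Idealized: assumes frames can be processed independently in parallel.
    """
    speedup = seconds_per_frame * fps  # work per second of video / one second of wall time
    workers = math.ceil(speedup)
    return speedup, workers

for t in (6.0, 8.0):
    speedup, workers = realtime_budget(t, fps=25)  # 25 fps is an assumption
    print(f"{t:.0f} s/frame at 25 fps: {speedup:.0f}x speed-up or {workers} parallel workers")
```

Because the per-frame cost is constant, the requirement scales linearly: at an assumed 25 fps, the reported 6-8 seconds per frame translates into roughly a 150-200x speed-up, whether that comes from optimized code, faster hardware, or parallel machines.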