MAT 255 Techniques, History & Aesthetics of the Computational Photographic Image

https://www.mat.ucsb.edu/~g.legrady/aca ... s255b.html

Please provide a response to any of the material covered in this week's two presentations by clicking on "Post Reply". Consider this to be a journal to be viewed by class members. The idea is to share thoughts, links to other information, and anything else that may be of interest to you and relevant to the topic at hand.

The report for this topic is due by May 6, 2021, but each of your submissions can be updated throughout the course.

Report 4: Volumetric data, Computational Photography

-

kevinclancy

- Posts: 6

- Joined: Thu Apr 01, 2021 5:33 pm

Re: Report 4: Volumetric data, Computational Photography

I thoroughly enjoyed the presentation by visiting artist Weidi Zhang. Astro (2021), the 360 spherical projection at the planetarium, was such a logical extension of the 360 stereoscopic VR format, yet I had never made that leap in my mind. In my explorations of photogrammetry, I have been striving for uncanny photorealism and have been slightly disappointed when my consumer-grade experiments fell short. What I really appreciated about Zhang’s work is that she was not concerned at all with the fidelity of the initial scan. She was primarily concerned with plotting usable data within a point cloud, and with the potential of the scan. The scan itself is not the end result but a raw material to be digitally molded, abstracted, and glitched.

Daniel Steegmann Mangrané, Phantom (Kingdom of all the animals and all the beasts is my name) (2015)

The point clouds and harsh monochrome of Zhang’s work made me think back to one of my first real aesthetic experiences of VR, Daniel Steegmann Mangrané’s Phantom (Kingdom of all the animals and all the beasts is my name) (2015), part of the New Museum Triennial: Surround Audience. I had seen a few VR pieces by that point, but they were all kind of gimmicky and focused heavily on the medium itself rather than on a transcendental aesthetic experience. Phantom was one of the first pieces that really made me feel something through VR. I had to wait in line for a while, watching multiple people stumble around the exhibition space with the Oculus Rift headset on. The entire time I was in line, I was self-conscious about how dumb I would look to the rest of the room, but as soon as I put the headset on, I was fully immersed in another world. The VR piece was a photogrammetry scan of the lush flora of a Brazilian rainforest, rendered as stark white point-cloud dots on a midnight-black background. It was incredibly simple, but that simplicity, or refinement (as in Zhang’s work), gave it a ghostly, transporting quality. I wandered around the virtual space for a while examining the diversity of plant life, spatially teleported to a place thousands of miles from where I stood. There was a moment of profound realization when I thought to look down at my body and had the out-of-body experience of having no physical form, totally enmeshed in the point cloud of a massive tree trunk.

Our exploration of photogrammetry also made me think of the work of two artists I met at Carnegie Mellon University, Steve Gurysh and Claire Hentschker. Steve Gurysh is one of my studio mates, and he introduced me to the process, toolsets, and potential of photogrammetry. In his project, Parts per trillion (2016-2019), Gurysh 3D scanned a collection of graffitied rocks on the banks of the Bow River in Calgary, digitally embossed the graffiti, 3D printed the resulting scans, slip cast the prints, and then pit-fired the ceramics ceremonially along the banks of the Bow River. The linked video elegantly documents the process (https://vimeo.com/382237212).

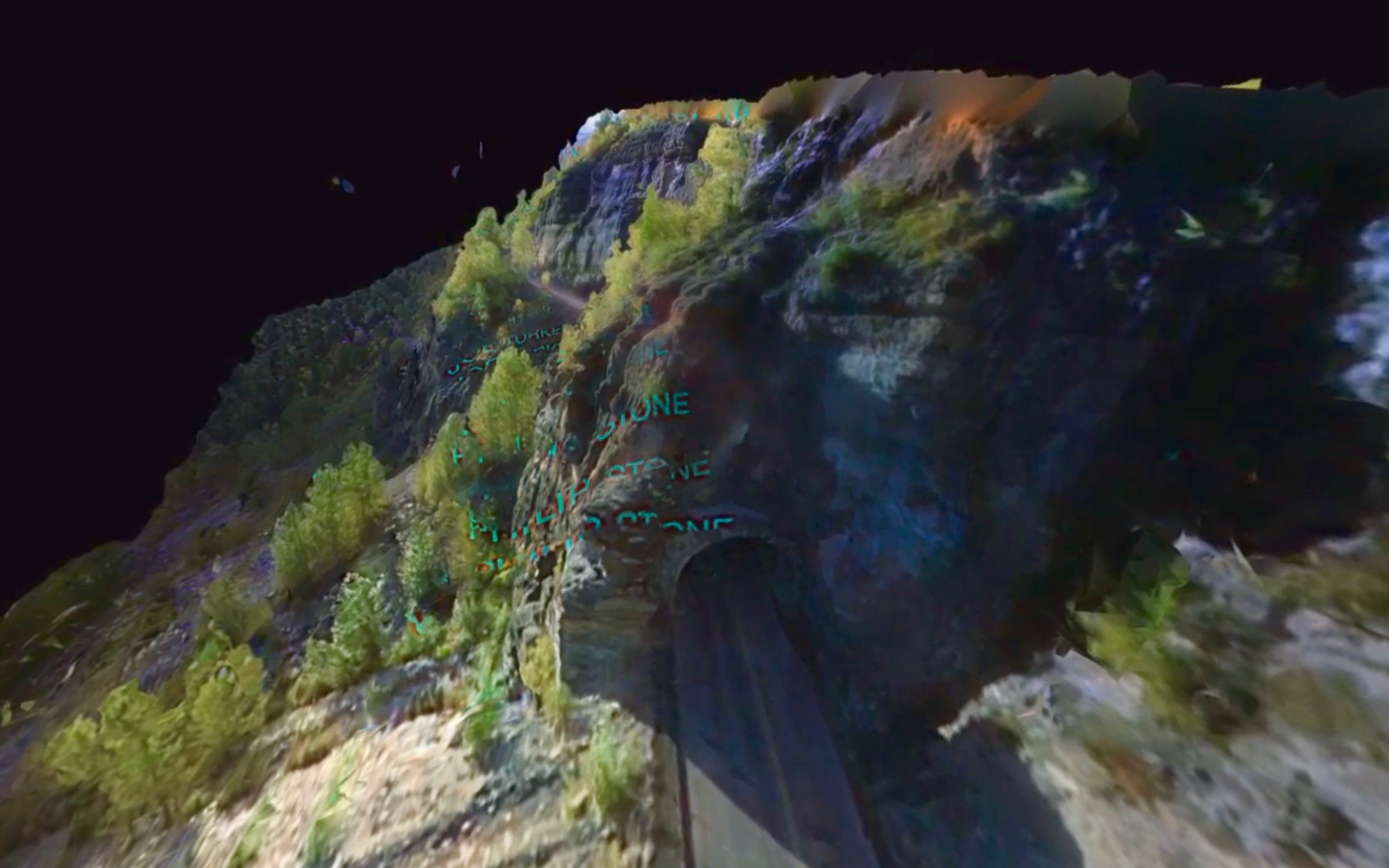

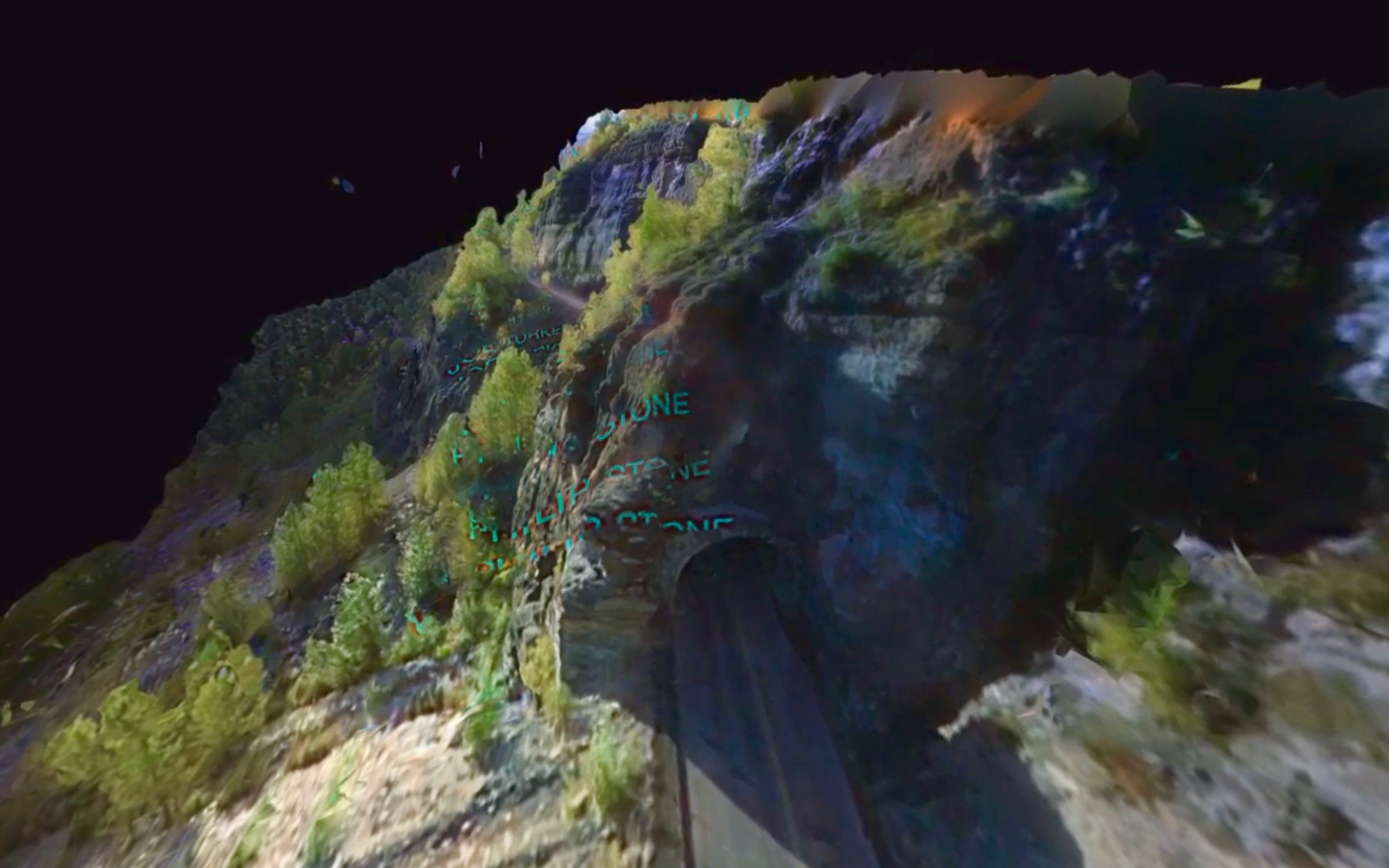

Claire Hentschker, Shining360 (2016), still from 360 Video

Gurysh’s work represents the fine detail I am seeking to achieve with photogrammetry and digital fabrication, whereas Hentschker’s work with photogrammetry and 360 video embraces the phantasmic glitches inherent in the process. These ghostly traces reinforce her conceptual content, as when she eerily floats through melty scans of abandoned malls in Merch Mulch (2017), or in her found-footage reconstruction of a now-demolished rollercoaster in Ghost Coaster (2019). One of her most notable works, Shining360 (2016), features haunting photogrammetric 360 video generated from Stanley Kubrick’s film “The Shining”. The iconic opening drive through the mountains to the hotel leaves glitched artifacts as the title credits are spatialized and staggered over the unfolding landscape. There are additional flares of ethereal color when objects or actors move faster than the software can capture them, resulting in blood-red streaks from Danny’s sweatshirt in the labyrinth.

Links:

Daniel Steegmann Mangrané Phantom (Kingdom of all the animals and all the beasts is my name) https://scanlabprojects.co.uk/work/phantom/

Claire Hentschker Merch Mulch http://www.clairesophie.com/merch-mulch

Claire Hentschker Shining360 http://www.clairesophie.com/shining360excerpt

Steve Gurysh Parts per trillion http://www.stevegurysh.com/ppt.html

Laurie Anderson at MASS MoCA https://massmoca.org/event/laurie-anderson/

Oliver Laric http://oliverlaric.com/

-

alexiskrasnoff

- Posts: 6

- Joined: Thu Apr 01, 2021 5:32 pm

Re: Report 4: Volumetric data, Computational Photography

I am not sure whether this first thing really fits into the category of computational photography, since most of the technique happens in the physical world and simply takes advantage of the way modern cameras work. Still, the discussion of cameras that can take pictures around corners by analyzing how light bounces reminded me of a really interesting video made by Tom Scott in collaboration with Seb Lee-Delisle (https://www.youtube.com/watch?v=tozuzV5YZ7U). It's only about 5 minutes long, so I recommend watching it. It explains how they create really cool practical laser effects with code and by taking advantage of the camera's rolling shutter.

This rolling shutter effect reminds me of a more fun/goofy camera technique I worked with last quarter that could arguably be considered computational photography. The app TikTok has a lot of real-time filters that alter videos as you record them, and one in particular scans across the screen, freezing the image line by line, almost like an extremely slow rolling shutter. By moving around while the scan is happening, you can create some really funky effects. Here are a few self-portraits I took with this technique, as well as the sketches I made from them! These were studies for a larger painting.
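The scan filter behaves like a very slow rolling shutter: each row of the final image comes from a different moment in time. Here is a minimal sketch in Python, assuming the video is available as a list of equal-sized grayscale frames and that the scan sweeps top to bottom over the whole clip. The function name `scan_freeze` and the frame representation are hypothetical; this is not how TikTok actually implements the filter.

```python
from typing import List

Frame = List[List[int]]  # one grayscale frame: a list of pixel rows

def scan_freeze(frames: List[Frame]) -> Frame:
    """Build one image whose rows are frozen at successive moments in time.

    Hypothetical scan filter: the scan line sweeps top to bottom over the
    duration of the clip, and row r is copied from whichever frame was live
    when the scan line reached it.
    """
    n_frames, n_rows = len(frames), len(frames[0])
    output: Frame = []
    for r in range(n_rows):
        t = r * n_frames // n_rows  # frame index when the scan reaches row r
        output.append(list(frames[t][r]))
    return output

# Demo: a 1-pixel-wide vertical bar that moves one column right per frame.
size = 4
frames = [[[1 if c == t else 0 for c in range(size)] for _ in range(size)]
          for t in range(size)]
for row in scan_freeze(frames):
    print(row)  # the vertical bar comes out sheared into a diagonal
```

Because the subject moves while the scan is in progress, a straight vertical bar is recorded as a diagonal. The same shear is what makes a real rolling shutter distort fast-moving objects.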

I also think it's interesting how a lot of computational photography techniques have become so commonplace that we take them for granted, like portrait mode or HDR mode on phone cameras.
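As a concrete example of one of these everyday techniques, the basic idea behind HDR merging can be sketched as combining bracketed exposures into a single radiance estimate per pixel. This is a minimal sketch under stated assumptions: a linear sensor that clips at 1.0, frames that are already aligned, and a simple triangle ("hat") weighting. The function `merge_hdr` is hypothetical, and real phone HDR pipelines also align, denoise, and tone-map.

```python
from typing import List, Sequence

def merge_hdr(exposures: Sequence[Sequence[float]],
              times: Sequence[float]) -> List[float]:
    """Estimate per-pixel scene radiance from bracketed exposures.

    Assumes pixel values are linear in (radiance * exposure time) and clip at 1.0.
    """
    radiance = []
    for i in range(len(exposures[0])):
        num = den = 0.0
        for img, t in zip(exposures, times):
            v = img[i]
            w = min(v, 1.0 - v)  # hat weight: trust mid-tones, skip clipped/black
            if w <= 0.0:
                continue
            num += w * (v / t)   # each usable pixel estimates radiance as value / time
            den += w
        radiance.append(num / den if den > 0.0 else float("nan"))
    return radiance

# Simulate a scene with an 80:1 brightness range captured at two exposure times.
scene = [0.05, 0.5, 4.0]
times = [1.0, 0.1]
exposures = [[min(s * t, 1.0) for s in scene] for t in times]
print(merge_hdr(exposures, times))  # close to the original scene values
```

The long exposure clips the brightest pixel while the short one keeps it, and the weighting lets each pixel's estimate come from whichever exposures captured it usefully.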

Re: Report 4: Volumetric data, Computational Photography

Wow, nice work, Alexis haha :D

This week, I explored coded aperture imaging, which is one of the forms of computational photography.

The point spread function (PSF) is the response of an imaging system to a point source or point object. This video explains how the PSF of a lens changes when a coded aperture is used: https://www.youtube.com/watch?v=rSrbrVNk2sM
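To make the definition concrete: if blur is modeled as a convolution, imaging a single point of light returns the PSF itself. This is a 1-D sketch in Python; the two kernels are hypothetical stand-ins for an open aperture (a flat box) and a coded aperture (a patterned kernel with the same total light), whereas real coded apertures are 2-D masks.

```python
from typing import List

def convolve(signal: List[float], psf: List[float]) -> List[float]:
    """Full 1-D linear convolution; output length is len(signal) + len(psf) - 1."""
    out = [0.0] * (len(signal) + len(psf) - 1)
    for i, s in enumerate(signal):
        for j, p in enumerate(psf):
            out[i + j] += s * p  # each input sample spreads a scaled copy of the PSF
    return out

point = [0.0, 0.0, 1.0, 0.0, 0.0]         # a single point of light
open_aperture = [0.25, 0.25, 0.25, 0.25]  # hypothetical flat (box) blur
coded_aperture = [0.4, 0.0, 0.2, 0.4]     # hypothetical coded pattern, same total

print(convolve(point, open_aperture))   # [0.0, 0.0, 0.25, 0.25, 0.25, 0.25, 0.0, 0.0]
print(convolve(point, coded_aperture))  # [0.0, 0.0, 0.4, 0.0, 0.2, 0.4, 0.0, 0.0]
```

In both cases the point is replaced by a copy of the kernel, which is why changing the aperture shape directly changes the PSF.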

Because every imaging system introduces some distortion, image restoration aims to reduce it by post-processing the image. If the PSF of the blur is known, a clean image can be recovered, as you can see in this video: https://www.youtube.com/watch?v=YhLF95crTWs
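When the PSF is known, the blur can be undone in the frequency domain, because convolution becomes multiplication there. This is a minimal 1-D sketch of Wiener-style deconvolution, assuming circular (wrap-around) blur and a hypothetical two-tap PSF. The small constant `k` is an assumed stand-in for the noise-to-signal ratio and keeps the division stable where the PSF's frequency response is small. It is a standard technique, not necessarily the one used in the linked video.

```python
import cmath
from typing import List

def dft(x: List[complex]) -> List[complex]:
    """Naive discrete Fourier transform (O(n^2), fine for short examples)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * f * t / n) for t in range(n))
            for f in range(n)]

def idft(X: List[complex]) -> List[complex]:
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[f] * cmath.exp(2j * cmath.pi * f * t / n) for f in range(n)) / n
            for t in range(n)]

def circular_blur(x: List[float], psf: List[float]) -> List[float]:
    """Blur x with psf, wrapping around at the edges (circular convolution)."""
    n = len(x)
    return [sum(x[(t - j) % n] * psf[j] for j in range(len(psf))) for t in range(n)]

def wiener_deconvolve(blurred: List[float], psf: List[float], k: float = 1e-6) -> List[float]:
    """Undo a known circular blur; k regularizes frequencies where |H| is small."""
    n = len(blurred)
    H = dft(psf + [0.0] * (n - len(psf)))  # PSF zero-padded to the signal length
    B = dft(blurred)
    X = [b * h.conjugate() / (abs(h) ** 2 + k) for b, h in zip(B, H)]
    return [v.real for v in idft(X)]

scene = [0.0, 0.0, 1.0, 0.0, 0.0, 3.0, 0.0, 0.0]
psf = [0.6, 0.4]  # hypothetical known blur kernel
blurred = circular_blur(scene, psf)
restored = wiener_deconvolve(blurred, psf)
print([round(v, 3) for v in restored])  # approximately equal to scene
```

Without `k`, any frequency where the PSF's response is exactly zero would cause a division by zero, which is the information-loss problem that coded apertures and coded exposure are designed to avoid.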

Coded apertures are useful because they allow the PSF to be engineered for specific purposes. Coded exposure, their temporal counterpart, applies the same idea to the shutter: with the modified PSF, a coded exposure camera can preserve high spatial frequencies in a motion-blurred image and make the deblurring process well-posed [1]. In addition, the PSF can be engineered to enhance the performance of depth estimation techniques such as depth-from-defocus. Coded aperture methods have been applied in many fields, such as astronomy, microscopy, and tomography. Their success relies on selecting the optimal coded aperture for a particular application.
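The "well-posed" claim can be checked numerically. Motion blur convolves the scene with the shutter's open/closed pattern over time. Keeping the shutter open for the whole exposure (a box) produces exact zeros in the frequency response, so those frequencies are lost and no deconvolution can recover them, while a flutter code can keep every frequency response away from zero. The short code `[1, 0, 1, 1]` and the helper `min_response` are hypothetical illustrations, not the much longer optimized sequence used in [1].

```python
import cmath
from typing import List

def min_response(code: List[int], n: int) -> float:
    """Smallest |DFT| of the normalized shutter code, zero-padded to length n."""
    total = sum(code)
    h = [c / total for c in code] + [0.0] * (n - len(code))
    mags = [abs(sum(h[t] * cmath.exp(-2j * cmath.pi * f * t / n) for t in range(n)))
            for f in range(n)]
    return min(mags)

box = [1, 1, 1, 1]      # shutter open for all 4 time slices
flutter = [1, 0, 1, 1]  # hypothetical flutter code: open, closed, open, open
print(min_response(box, 8))      # effectively zero: some frequencies are destroyed
print(min_response(flutter, 8))  # stays well above zero (1/3 here)
```

The flutter code lets in less light than the box, but every frequency survives the blur, which is the trade-off that makes deblurring solvable.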

The advantage of the coded approach is clearest when comparing deblurred results from a conventional exposure and a fluttered-shutter exposure in [1].

Reference

[1] Raskar, Ramesh, Amit Agrawal, and Jack Tumblin. "Coded exposure photography: motion deblurring using fluttered shutter." ACM SIGGRAPH 2006 Papers. 2006. 795-804.
