Report 3: Material / Machine-Generated Images, Data Noise

MAT255 Techniques, History & Aesthetics of the Computational Photographic Image

https://www.mat.ucsb.edu/~g.legrady/aca ... s255b.html

Please provide a response to any of the material covered in this week's two presentations by clicking on "Post Reply". Consider this to be a journal to be viewed by class members. The idea is to share thoughts and other information (including links), or anything else that may be of interest to you and relevant to the topic at hand.

The report for this topic is due by April 29, but each of your submissions can be updated throughout the course.

-

siennahelena

Re: Report 3: Material / Machine-Generated Images, Data Noise

For this week’s post, I wanted to continue my reflections on images, power, and Internet platforms. Of particular interest to me was our discussion of how to blur an image. During this conversation, I immediately thought of how blurring is often used as a mechanism for censorship or privacy. For example, network television shows often blur sexual imagery or blur individuals to anonymize their identities. Blurring is only one way to censor or anonymize an image; other image-processing techniques include pixelization (which reduces the resolution of a region) and covering part of an image with a black bar. Colloquially, these techniques are termed “fogging”.
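To make the difference between these three kinds of fogging concrete, here is a minimal Python sketch, assuming a grayscale image stored as a list of rows of 0-255 integers and a rectangular region given as (top, left, height, width). The function names are hypothetical, and the box blur is a simple stand-in for the Gaussian blur most image editors use.

```python
from typing import List, Tuple

Image = List[List[int]]           # grayscale, one list of 0-255 ints per row
Region = Tuple[int, int, int, int]  # (top, left, height, width)


def copy_image(img: Image) -> Image:
    """Return a deep copy so the original image is never modified."""
    return [row[:] for row in img]


def black_bar(img: Image, region: Region) -> Image:
    """Cover the region with solid black (value 0), discarding its content."""
    top, left, h, w = region
    out = copy_image(img)
    for y in range(top, top + h):
        for x in range(left, left + w):
            out[y][x] = 0
    return out


def pixelate(img: Image, region: Region, block: int) -> Image:
    """Replace each block x block tile inside the region with the tile's average."""
    top, left, h, w = region
    out = copy_image(img)
    for by in range(top, top + h, block):
        for bx in range(left, left + w, block):
            ys = range(by, min(by + block, top + h))
            xs = range(bx, min(bx + block, left + w))
            vals = [img[y][x] for y in ys for x in xs]
            avg = round(sum(vals) / len(vals))
            for y in ys:
                for x in xs:
                    out[y][x] = avg
    return out


def box_blur(img: Image, region: Region, radius: int) -> Image:
    """Replace each pixel in the region with the mean of its (2r+1) x (2r+1)
    neighbourhood in the original image; the window is cut off at the border."""
    top, left, h, w = region
    rows, cols = len(img), len(img[0])
    out = copy_image(img)
    for y in range(top, top + h):
        for x in range(left, left + w):
            vals = [
                img[yy][xx]
                for yy in range(max(0, y - radius), min(rows, y + radius + 1))
                for xx in range(max(0, x - radius), min(cols, x + radius + 1))
            ]
            out[y][x] = round(sum(vals) / len(vals))
    return out
```

For example, `pixelate(img, (0, 0, 4, 4), block=2)` turns the top-left 4x4 corner into four flat 2x2 tiles, while `black_bar` throws the region's values away entirely. The blur and the mosaic both still keep averages of the original pixels, which is part of why they can leak information.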

This image is from researchers More, Souza, Wehrmann, and Barros (2018), who developed an AI that censors nude images of women by covering explicit regions with swimsuits.

Part of why I am interested in fogging on Internet media platforms is the idea of a user’s right to privacy. As one example, Google Maps lets users request that images of their homes or of themselves be “fogged” in Street View. This feature started in 2008, in response to privacy concerns about people being photographed and identifiable on Street View without their knowledge or permission. However, blurring or pixelating is not a reliable privacy measure, because it can often be defeated. Wired published an article summarizing how machine-learning software can recognize faces even when they are blurred or pixelated https://www.wired.com/2016/09/machine-l ... hers-show/. Thus, a user’s request to be anonymized does not guarantee that they will actually remain anonymous.
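One reason pixelation is a weak privacy measure is that each mosaic tile still stores the average of the original pixels. The sketch below is a deliberately simplified illustration, not the method from the research Wired describes (which used trained machine-learning models): assuming an attacker already has a small set of candidate photos, they can pixelate each one the same way and pick whichever best matches the fogged image. The function names and data are hypothetical.

```python
from typing import Dict, List

Image = List[List[float]]  # grayscale, one list of pixel values per row


def pixelate(img: Image, block: int) -> Image:
    """Mosaic the whole image: every block x block tile becomes its average."""
    rows, cols = len(img), len(img[0])
    out = [list(row) for row in img]
    for by in range(0, rows, block):
        for bx in range(0, cols, block):
            ys = range(by, min(by + block, rows))
            xs = range(bx, min(bx + block, cols))
            avg = sum(img[y][x] for y in ys for x in xs) / (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = avg
    return out


def distance(a: Image, b: Image) -> float:
    """Sum of squared per-pixel differences between two same-sized images."""
    return sum((pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def identify(target: Image, candidates: Dict[str, Image], block: int) -> str:
    """Pixelate every known candidate with the same block size and return
    the name whose mosaic is closest to the pixelated target."""
    return min(candidates, key=lambda name: distance(pixelate(candidates[name], block), target))
```

This only works against a known, small set of candidates whose mosaics differ, but it shows that a mosaic is not an irreversible erasure of the original.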

From an aesthetic point of view, I am also intrigued by the power of suggestion that comes from “fogging”. Fogging a specific area of an image, whether through blurring, pixelating, or covering it with a black bar, suggests that information is being purposefully hidden. It draws our attention to the missing information: why is it being withheld? What am I not allowed to see, and why? This is very different from an edit in which the information is removed entirely. When information has been removed without a trace, the same questions do not arise as readily; we don’t immediately ask what is being withheld.

Below, I present three versions of the same vacation photo of my friend: the first is the original, in the second I blurred her face, and in the third I removed her completely. Applying the idea of a person’s right to privacy, if my friend wanted to be anonymized, which of these photos would she want posted? Probably the last one. However, the last photo is also the least faithful to the original. So if I, as the poster of the image, wanted to be factual, it would be inaccurate for me to post the photo in which she has been fully removed. Indeed, another Wired article suggests that blurring and censoring images can be anti-journalistic https://www.wired.com/story/opinion-blu ... nti-human/. So whose rights should be respected when it comes to anonymity and privacy?

References:

- M. D. More, D. M. Souza, J. Wehrmann and R. C. Barros, "Seamless Nudity Censorship: an Image-to-Image Translation Approach based on Adversarial Training," 2018 International Joint Conference on Neural Networks (IJCNN), 2018, pp. 1-8, doi: 10.1109/IJCNN.2018.8489407.

-

ashleybruce

Re: Report 3: Material / Machine-Generated Images, Data Noise

Since this week’s topic is noise, I thought I would touch a bit on noise in machine learning models and image classification algorithms.

Deep learning can now be found in many applications, such as self-driving cars, medical imaging, online chat support, and surveillance. As these technologies become more integrated into daily life, it is imperative that the neural networks behind them work reliably, so there is a growing need to test them. This usually involves training and testing models on images with increasing levels of noise, which makes them progressively harder to classify correctly. In one example from a dataset of handwritten numbers, noise is continuously added to an image of a 1 to test whether the neural network can still correctly label it as a 1.
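A minimal sketch of the progressive-noise idea, assuming a tiny hand-drawn 5x5 "1" with pixel values in [0, 1] and zero-mean Gaussian noise. A real robustness test would use actual dataset images and a trained classifier, neither of which is shown here; `ONE` and the function names are hypothetical.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale, pixel values in [0.0, 1.0]

# Hypothetical 5x5 drawing of the digit "1" (1.0 = ink, 0.0 = background).
ONE: Image = [
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 1.0, 0.0],
]


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise (std = sigma) to every pixel, clipped to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row] for row in img]


def noise_series(img: Image, sigmas: List[float], seed: int = 0) -> List[Image]:
    """Return one noisy copy of `img` per noise level, using a seeded RNG for repeatability."""
    rng = random.Random(seed)
    return [add_gaussian_noise(img, s, rng) for s in sigmas]


def mean_abs_change(a: Image, b: Image) -> float:
    """Average per-pixel difference, a rough measure of how much the image changed."""
    diffs = [abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    levels = [0.0, 0.2, 0.5, 1.0]
    for sigma, noisy in zip(levels, noise_series(ONE, levels)):
        print(f"sigma={sigma}: mean change {mean_abs_change(ONE, noisy):.3f}")
```

Each noisy copy would then be fed to the classifier to see at which noise level it stops answering "1".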

While this example is fairly straightforward, its implications become clear when looking at how self-driving cars work. Given a picture of a car with nothing else around it, it’s relatively easy for a machine to label it as a car. But if we introduce other things around it, the task becomes increasingly difficult. This is why machine learning algorithms need large amounts of labeled data to be trained correctly, and why, when we are asked to verify that we are not a robot, we are asked to identify “all the images with trains in them.”

People often think of “noisy images” as bad: the noise detracts from the subject and contributes a sense of disorder, making the image less aesthetically appealing.

While noisy images may not be as pretty from an aesthetic perspective, using them to train machine learning models could make those models more robust and better able to classify things under non-ideal conditions.
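Using noisy images for training is a form of data augmentation. A minimal sketch, assuming a dataset of (image, label) pairs with pixel values in [0, 1]: every original example is kept and joined by noisy copies that share its label, so the model sees the same digit under worse conditions. The function names and noise levels are illustrative assumptions, not a specific library's API.

```python
import random
from typing import List, Sequence, Tuple

Image = List[List[float]]   # grayscale, pixel values in [0.0, 1.0]
Example = Tuple[Image, int]  # (image, label)


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise (std = sigma) to every pixel, clipped to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row] for row in img]


def augment_with_noise(
    dataset: Sequence[Example], sigmas: Sequence[float], seed: int = 0
) -> List[Example]:
    """Keep every original example and append one noisy copy per sigma,
    each carrying the same label as the image it was made from."""
    rng = random.Random(seed)
    augmented: List[Example] = list(dataset)
    for img, label in dataset:
        for sigma in sigmas:
            augmented.append((add_gaussian_noise(img, sigma, rng), label))
    return augmented
```

A model trained on `augment_with_noise(train_set, [0.1, 0.3])` sees three versions of every image; whether that actually improves robustness still has to be checked on a held-out set of noisy test images.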

-

nataliadubon

Re: Report 3: Material / Machine-Generated Images, Data Noise

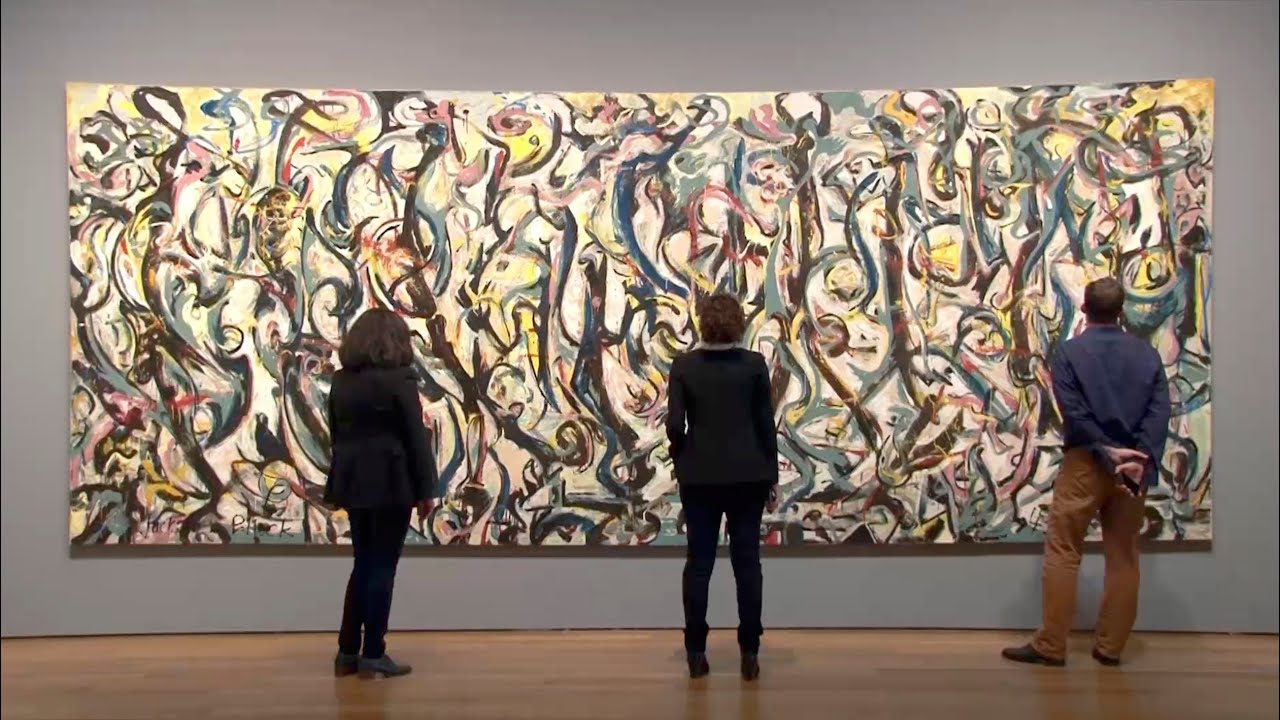

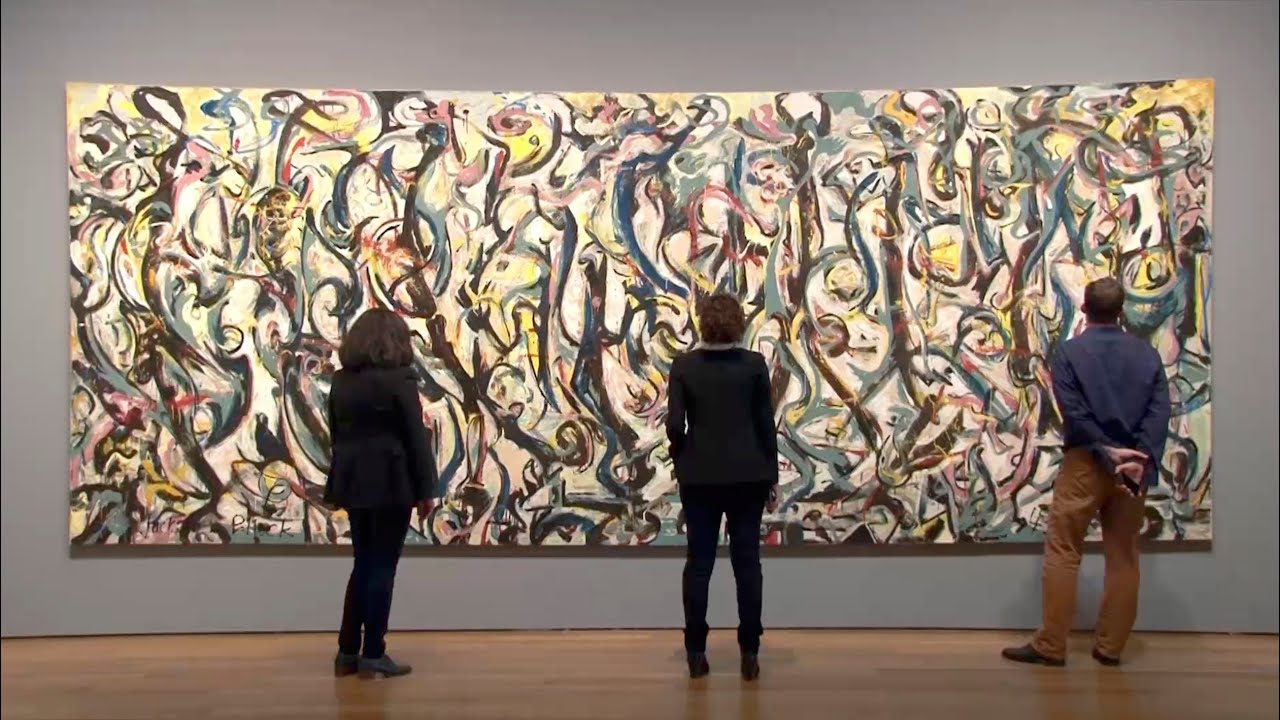

This past week we briefly touched on the rise of glitch art, which I decided to explore further. Glitch art often feels like an exploitation of the conventions of fine art. However, though glitch art seems synonymous with chaos, it is often made purposefully, with a goal in mind. Certain elements central to glitch art (such as the liquify effect) have now been explored further in traditional art. Either way, digital and traditional art have influenced each other, and both draw inspiration from artists who prefer abstraction over perfection. Personally, the example that comes to mind is Jackson Pollock, a pioneer of the Abstract Expressionist movement of the mid-twentieth century. Though Pollock worked with paint rather than a digital processing device, his fluid, liquifying technique shows some resemblance to the digital tool used among glitch artists.

By Jackson Pollock:

By Abihail Sarayno:

Though the two pieces are clearly not identical and differ entirely in subject and material (one is oil paint, the other digital), they resemble each other in their abstraction. Although viewers may make out an ocean wave on a sandy shore in the second image, the artist uses the liquify tool to abstract the subject. Glitch art, then, often presents itself as another outlet for continuing the abstract movement. Below is an image of how the liquify technique is actually applied in glitch art.
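Photoshop's own Liquify implementation is not described here, but the basic idea of a liquify-style distortion can be sketched as a displacement map: each output pixel copies its value from a shifted location in the input. The minimal Python sketch below, assuming a grayscale image stored as a list of rows, twists pixels around a center point, with the twist fading to zero at a chosen radius. The function name and parameters are hypothetical.

```python
import math
from typing import List

Image = List[List[int]]  # grayscale, one list of pixel values per row


def swirl(img: Image, cx: float, cy: float, radius: float, strength: float) -> Image:
    """Twist pixels around (cx, cy). The twist angle is `strength` radians at the
    center and fades linearly to 0 at `radius`; pixels farther out are untouched."""
    rows, cols = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(rows):
        for x in range(cols):
            dx, dy = x - cx, y - cy
            r = math.hypot(dx, dy)
            if r >= radius:
                continue
            angle = strength * (1 - r / radius)
            # Inverse mapping: rotate this pixel's offset by `angle` to find which
            # source pixel it should copy, so every output pixel gets a value.
            sx = cx + dx * math.cos(angle) - dy * math.sin(angle)
            sy = cy + dx * math.sin(angle) + dy * math.cos(angle)
            ix, iy = round(sx), round(sy)  # nearest-neighbour sampling
            if 0 <= ix < cols and 0 <= iy < rows:
                out[y][x] = img[iy][ix]
    return out
```

Calling `swirl(img, 4, 4, 3, 1.5)` on a 9x9 image smears the pixels near the middle into a spiral while the corners stay put; raising `strength` or applying it repeatedly pushes the result further toward abstraction.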

Ultimately, the emergence of Adobe Photoshop has made glitch art more accessible by providing advanced tools for liquifying, pixelating, and distorting images. The beauty of glitch is that, by definition, a glitch is a mistake, an error. However, artists have learned to employ it purposefully and turn it into art. With that said, I wanted to end this week's report with a memorable quote from Bob Ross that seems fitting for the movement of glitch art:

“We don't make mistakes. We just have happy accidents.”

References:

- “Away from the Easel: Jackson Pollock's Mural.” The Guggenheim Museums and Foundation, https://www.guggenheim.org/exhibition/a ... ocks-mural.

- “How Would You Accomplish This Warp/Distort/Liquify Effect in Photoshop?” Graphic Design Stack Exchange, https://graphicdesign.stackexchange.com ... -photoshop.

- Latimer, Joe. “Glitch Art 101: Mostly Everything You Need to Know about Glitch Art - Joe Latimer: A Creative Digital Media Artist: Winter Park, FL.” Joe Latimer | A Creative Digital Media Artist | Winter Park, FL, 6 Aug. 2020, https://www.joelatimer.com/glitch-art-1 ... litch-art/.

- “Liquify by Abihail Sarayno.” Fine Art America, https://fineartamerica.com/featured/liq ... rayno.html.