I am especially interested in “living sculptures” or installations inspired by nature that use principles of biomimesis. One of the most relevant artists in this field for me is Neri Oxman, who has been working with insects and living materials to create art and design.

Based on this week’s resources, I selected the following works:

1. David Rokeby – San Marco Flow (Generative Video Installation, 2004)

San Marco Flow was an installation in Piazza San Marco in Venice, where movement and time were central elements. As people walked through the space, the installation visualized their presence, leaving a trace behind them that showed their past pathways. This suggests the idea that as we move forward, we always leave traces behind.

What I found most interesting is that without movement there is no image: elements that do not move remain invisible to the program.
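To make this idea concrete for myself, here is a minimal sketch of one way such behavior could be produced. It is not Rokeby's actual software: the function name `update_trace`, the `threshold` and `decay` parameters, and the tiny one-row "frames" are all my own assumptions. The sketch compares each frame with the previous one, lights up only the pixels that changed, and lets older traces fade slowly.

```python
def update_trace(prev_frame, frame, trace, threshold=10, decay=0.9):
    """Return a new trace image: changed pixels light up, old traces fade.

    Hypothetical illustration only; frames are lists of rows of gray values.
    """
    new_trace = []
    for prev_row, row, trace_row in zip(prev_frame, frame, trace):
        new_row = []
        for before, now, old in zip(prev_row, row, trace_row):
            moved = abs(now - before) > threshold
            # A pixel that changed is drawn at full strength;
            # otherwise whatever trace was there simply fades.
            new_row.append(1.0 if moved else old * decay)
        new_trace.append(new_row)
    return new_trace


# A 1x5 "piazza": a walker (255) moves one pixel to the right per frame,
# while a bright but static object (200) sits at the last position.
frames = [
    [[255, 0, 0, 0, 200]],
    [[0, 255, 0, 0, 200]],
    [[0, 0, 255, 0, 200]],
]

trace = [[0.0] * 5]
for prev, cur in zip(frames, frames[1:]):
    trace = update_trace(prev, cur, trace)

print(trace)
```

In this toy example the static object never appears in the trace, however bright it is, while the walker's earlier positions remain visible as a fading path.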

The key concepts I identify are:

a) time and the traces we leave behind;

b) movement as evidence of life; and

c) data transformation to reveal the invisible.

2. Jim Campbell – Data Transformation 3 (2017)

Jim Campbell holds a B.S. in Electrical Engineering and Mathematics from the Massachusetts Institute of Technology. His work has been exhibited internationally in institutions such as the Whitney Museum of American Art (New York) and the San Francisco Museum of Modern Art, among others.

In this work, he transforms visual information by adding noise or effects that create the illusion of low resolution. The piece uses LEDs to display images in a way that merges abstraction and data representation. As Campbell explains:

“By reducing the resolution of the color side of the image, the two sides present similar amounts of information, with each side representing the data in a different way.”
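As a rough illustration of what "reducing the resolution" can mean in terms of data, the sketch below averages small tiles of a grayscale image into single values. This is a generic technique that I am using to think through the quote, not Campbell's actual process; the function name `downsample` and the sample image are my own assumptions.

```python
def downsample(image, block):
    """Average each block x block tile of a grayscale image into one value.

    Hypothetical illustration only; the image is a list of rows of gray values.
    """
    height, width = len(image), len(image[0])
    result = []
    for top in range(0, height, block):
        row = []
        for left in range(0, width, block):
            tile = [
                image[y][x]
                for y in range(top, min(top + block, height))
                for x in range(left, min(left + block, width))
            ]
            row.append(sum(tile) / len(tile))
        result.append(row)
    return result


image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [100, 200, 50, 50],
    [100, 200, 50, 50],
]

low_res = downsample(image, 2)
print(low_res)
```

Sixteen values become four: the overall distribution of light and dark survives, but fine detail (such as the 100/200 stripes in the lower left) is merged into a single average.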

What attracted my attention was how the transformation of movement captured in the videos completes the experience. The changing images make me reinterpret the same “reality” by focusing on different aspects of it.

The key elements I identify are:

a) visuals based on simple rules that generate complex results;

b) shifting focus to different aspects of the same information; and

c) inviting the viewer to change perspective, to “see with new eyes.”

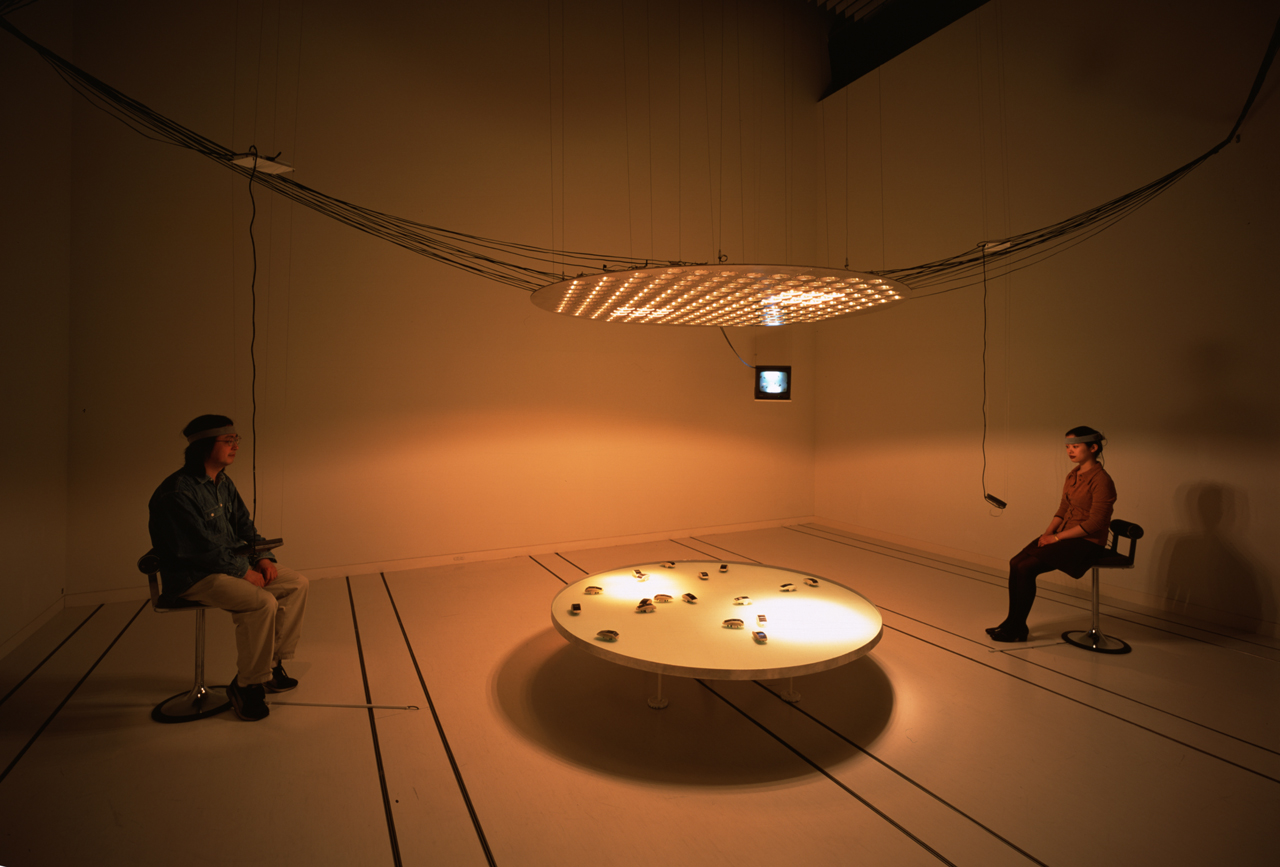

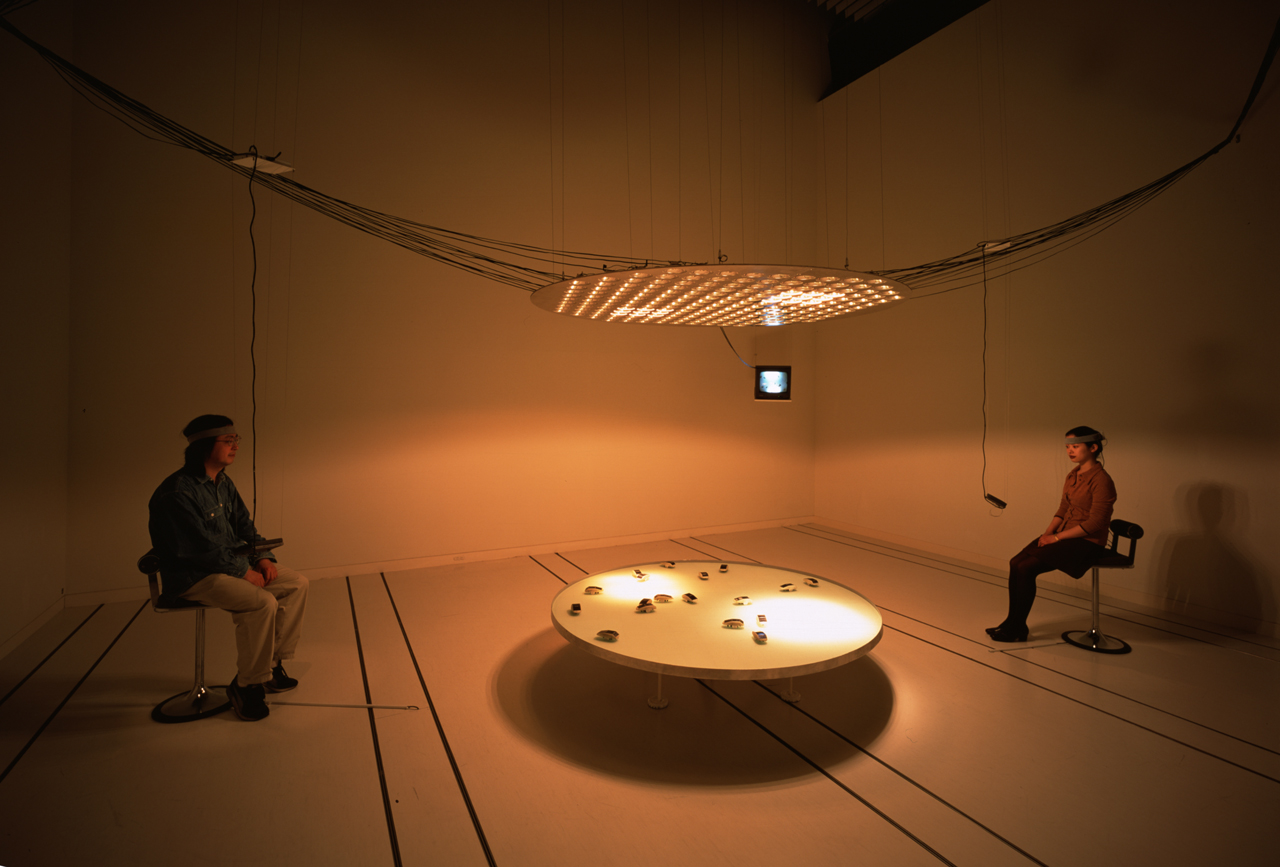

3. Ulrike Gabriel – terrain_02 (1997)

Ulrike Gabriel explores human reality through robotics, virtual reality (VR), installations, performative formats, and painting. In terrain_02, she worked with biodata and light-responsive devices.

Two participants sit facing each other at a round table in a nonverbal dialogue. They are connected via EEG interfaces to a system of solar-powered robots. Their brainwaves are measured, analyzed, and compared. The ratio between the two frequencies is projected onto the robots through changing light intensities, from above using lamps and from below using electroluminescent sheets. The light controls the speed and behavior of the robots, activating or deactivating different areas of the terrain. Depending on the participants’ relationship and their inner responses to each other, unique motion patterns emerge.
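The chain described here (two brainwave frequencies, their ratio, light intensity, robot speed) can be sketched in a few lines. The actual mapping used in terrain_02 is not documented in my sources, so everything below is an assumption: the function names `light_intensity` and `robot_speed`, the choice that closer frequencies give brighter light, and the `max_speed` and `wake_threshold` values.

```python
def light_intensity(freq_a, freq_b):
    """Map the ratio of two dominant EEG frequencies (Hz) to a 0..1 light level.

    Assumption: the closer the two frequencies, the brighter the light.
    """
    if freq_a <= 0 or freq_b <= 0:
        raise ValueError("frequencies must be positive")
    return min(freq_a, freq_b) / max(freq_a, freq_b)


def robot_speed(intensity, max_speed=5.0, wake_threshold=0.2):
    """Hypothetical solar-powered robot: still in dim light, faster in bright light."""
    if intensity < wake_threshold:
        return 0.0
    return max_speed * intensity


# Two participants with identical dominant frequencies, then diverging ones.
for a, b in [(10.0, 10.0), (8.0, 16.0), (2.0, 20.0)]:
    level = light_intensity(a, b)
    print(a, b, level, robot_speed(level))
```

Under these assumptions, the robots move fastest when the two participants' frequencies match and stop entirely when they diverge strongly, which is one possible way the "relationship" between the participants could become visible as motion.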

I selected this piece because I am interested in biodata as well. It is fascinating to understand the work as one that evolves over time, depending on the dialogue between the participants. This dialogue is unique, as is their relationship. For me, it is important to see the interaction between the different elements: the display of small robots that begin to move because of light creates an internal narrative. Light induces movement and, as a consequence, sets the conditions for life.

The key concepts I identify are:

a) light as a source of life; and

b) biodata as a tool to reveal relationships between humans and non-humans.